Prompt Testing for Claude

Anthropic has introduced new prompt testing and evaluation features for its Claude 3.5 Sonnet language model, accessible through the Anthropic Console 1 4. Developers can now generate prompts with the built-in prompt generator, then test and assess them to optimize inputs and improve Claude's responses for specific tasks 2. The new Evaluate tab lets users test prompts across a range of scenarios, either by uploading real-world examples or by generating AI-driven test cases 3. This streamlines prompt engineering: developers can compare different prompts side by side and rate responses on a five-point scale 1 2. While these tools may not entirely replace prompt engineers, they aim to help both novice and experienced users rapidly improve their AI applications' performance 2 3.
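To make the side-by-side comparison concrete, here is a minimal sketch of how five-point ratings for two prompt variants could be aggregated over a shared set of test cases. It does not call the Anthropic Console or any Anthropic API; the variant names, the ratings, and the `summarize` helper are all hypothetical and exist only for illustration.

```python
from statistics import mean

# Hypothetical reviewer ratings (1 = poor, 5 = excellent) for two prompt
# variants, each scored on the same four test cases.
ratings = {
    "prompt_a": [3, 4, 2, 4],
    "prompt_b": [4, 5, 4, 3],
}


def summarize(ratings_by_prompt):
    """Return the mean rating per prompt, rejecting off-scale values."""
    summary = {}
    for name, scores in ratings_by_prompt.items():
        if not scores or any(s not in range(1, 6) for s in scores):
            raise ValueError(f"{name}: ratings must be integers from 1 to 5")
        summary[name] = mean(scores)
    return summary


summary = summarize(ratings)
# Pick the variant with the highest average rating.
best = max(summary, key=summary.get)
print(summary, best)
```

Averaging is only one possible aggregation; with few test cases, looking at the individual ratings is usually more informative than a single mean.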

AMD Acquires Silo AI

AMD has announced plans to acquire Silo AI, Europe's largest private AI lab, for approximately $665 million in an all-cash transaction 1 2. This strategic move aims to enhance AMD's AI capabilities and strengthen its position in the competitive AI hardware market 3. Silo AI, founded in 2017 and headquartered in Helsinki, Finland, brings extensive experience in developing tailored AI models, platforms, and solutions for leading enterprises 1. The acquisition is expected to accelerate AMD's AI strategy by providing access to top-tier AI talent, including over 300 AI experts with 125 PhDs, and expertise in large language models (LLMs), MLOps, and AI integration solutions 2. This marks AMD's third AI-focused acquisition within a year, following Mipsology and Nod.ai, as part of its efforts to build an end-to-end silicon-to-services platform and close the gap with market leader NVIDIA 2 4.

Oracle's HeatWave GenAI Innovations

Oracle has announced the general availability of HeatWave GenAI, a database-as-a-service platform that adds new features for generative AI applications. A key highlight is the integration of in-database large language models (LLMs), including quantized versions of Llama 3 and Mistral, which Oracle claims is an industry first 3 4. This approach allows organizations to deploy LLMs directly where their data resides, using standard CPUs rather than GPUs, potentially reducing infrastructure costs 2. HeatWave GenAI also includes an automated vector store that simplifies converting existing data into vector embeddings, enabling semantic search and other natural language applications without requiring extensive developer expertise 3. Combined with existing HeatWave capabilities such as AutoML, these features aim to streamline the development of AI-powered applications and let the different AI functions work together inside the database environment 3 4.
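For readers unfamiliar with what a vector store does, the sketch below shows the core idea of semantic search over embeddings using cosine similarity. It is not HeatWave's API: the three-dimensional vectors are hand-written toy values standing in for real embeddings, and `store`, `cosine`, and `search` are hypothetical names.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Toy "vector store": document title -> made-up embedding.
store = {
    "invoice policy": [0.9, 0.1, 0.0],
    "vacation policy": [0.1, 0.9, 0.1],
    "gpu pricing": [0.0, 0.2, 0.9],
}


def search(query_vec, store, k=2):
    """Return the k document titles most similar to the query vector."""
    ranked = sorted(store, key=lambda doc: cosine(query_vec, store[doc]), reverse=True)
    return ranked[:k]


# A query embedding that points mostly along the first dimension.
top = search([0.8, 0.2, 0.0], store, k=1)
print(top)
```

In a real system the embeddings would come from an embedding model and the ranking would typically use an approximate nearest-neighbor index rather than a full sort.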

Chinese AI Model Advancements

China's AI giants Alibaba and SenseTime have recently unveiled new AI models, intensifying competition in the country's rapidly evolving AI landscape. SenseTime introduced SenseNova 5.5, claiming a 30% performance improvement over its predecessor and surpassing GPT-4 in several key metrics 1. The company also launched multimodal and terminal-based models, demonstrating advanced cross-modal information integration capabilities 1. Meanwhile, Alibaba Cloud reported significant growth in its Tongyi Qianwen model, with downloads doubling to over 20 million in two months 1. The company emphasized its commitment to open-source initiatives, aiming to narrow the gap between open-source and closed-source models 1. These developments highlight the fierce competition among Chinese tech companies to establish dominance in the domestic AI market, with both firms leveraging major industry events to showcase their progress 1 2.

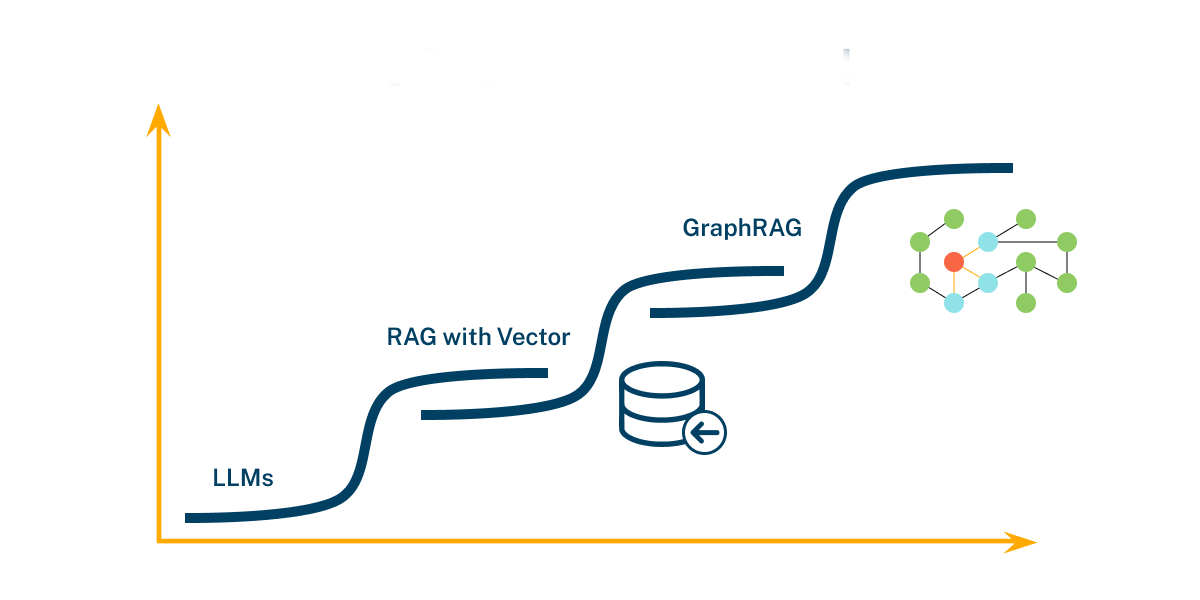

Microsoft's Graph-Based RAG

Microsoft Research has introduced GraphRAG, a novel approach to Retrieval-Augmented Generation (RAG) that leverages knowledge graphs to enhance reasoning over complex information and private datasets 1 3. Unlike traditional RAG methods that rely on vector similarity search, GraphRAG uses large language models (LLMs) to automatically extract rich knowledge graphs from text documents 3. It then organizes the data into a hierarchical structure of "communities," allowing for more comprehensive and diverse responses to queries 3. GraphRAG has demonstrated superior performance in answering holistic questions about large datasets and connecting disparate pieces of information, outperforming baseline RAG methods on comprehensiveness and diversity with a 70-80% win rate 3. Microsoft has made GraphRAG publicly available on GitHub, along with a solution accelerator for easy deployment on Azure, aiming to make graph-based RAG more accessible for users dealing with complex data discovery tasks 3.
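The graph-then-communities idea can be illustrated with a small sketch. It assumes the entity-relation triples have already been extracted (by an LLM in GraphRAG's case; here they are hard-coded, invented examples), and it groups entities with plain connected components as a simple stand-in for the hierarchical community detection GraphRAG performs. The function names are hypothetical and this is not GraphRAG's code.

```python
from collections import defaultdict

# Invented (subject, relation, object) triples standing in for LLM output.
triples = [
    ("Alice", "works_at", "Acme"),
    ("Acme", "based_in", "Helsinki"),
    ("Bob", "works_at", "Globex"),
    ("Globex", "supplies", "Initech"),
]


def build_graph(triples):
    """Build an undirected adjacency map from the triples."""
    graph = defaultdict(set)
    for subject, _relation, obj in triples:
        graph[subject].add(obj)
        graph[obj].add(subject)
    return graph


def communities(graph):
    """Group nodes into connected components (a flat stand-in for communities)."""
    seen, groups = set(), []
    for node in sorted(graph):
        if node in seen:
            continue
        stack, group = [node], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            group.add(current)
            stack.extend(graph[current] - seen)
        groups.append(sorted(group))
    return groups


groups = communities(build_graph(triples))
print(groups)
```

Each group could then be summarized separately, which is the step that lets a graph-based system answer broad questions about a whole dataset rather than only retrieving the nearest text chunks.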

LLM Search Integration

Recent work on integrating search with large language models (LLMs) has split into two distinct approaches: Search4LLM and LLM4Search. Search4LLM enhances LLMs with external search capabilities, allowing models to access up-to-date information beyond their training data. This approach is exemplified by projects like pyLLMSearch, which offers advanced Retrieval-Augmented Generation (RAG) systems with features such as hybrid search, deep linking, and support for multiple document collections 1. Conversely, LLM4Search uses LLMs to improve the search experience itself, as demonstrated by the "StatsChat" project from the UK Office for National Statistics (ONS), which uses embedding search and generative question answering to provide more relevant, context-aware search results 2. Challenges remain in implementing web search for local LLMs, however, with current solutions often relying on pre-indexed datasets rather than real-time web browsing 3 4. Using LLMs alone for search also raises efficiency concerns, which has led to hybrid approaches such as Retrieval Augmented LLMs (raLLM) that combine traditional information retrieval systems with LLM capabilities 5.
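Hybrid search generally means merging a keyword-based ranking with an embedding-based one. One common way to merge them is reciprocal rank fusion (RRF), sketched below. This is an illustration of the general technique, not a description of how pyLLMSearch or any other project named here implements it; the document IDs and both input rankings are made up, and `k=60` is the conventional smoothing constant.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists; each document scores sum(1 / (k + rank))."""
    scores = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] = scores.get(doc, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically.
    return sorted(scores, key=lambda doc: (-scores[doc], doc))


# Hypothetical results from a keyword index and a dense (embedding) index.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
dense_ranking = ["doc_b", "doc_c", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, dense_ranking])
print(fused)
```

RRF needs only rank positions, so it sidesteps the problem that keyword scores and cosine similarities live on different scales.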

Amazon's RAG Benchmark

Researchers at Amazon Web Services (AWS) have proposed a new benchmarking process to evaluate how well Retrieval-Augmented Generation (RAG) systems answer domain-specific questions. The method, detailed in a paper titled "Automated Evaluation of Retrieval-Augmented Language Models with Task-Specific Exam Generation," aims to provide a standardized, scalable, and interpretable approach to scoring different RAG systems 2. The benchmark generates multiple-choice exams tailored to specific document corpora, testing large language models (LLMs) in closed-book, "Oracle" RAG, and classical retrieval scenarios 2. Key findings suggest that optimizing the retrieval algorithm can yield larger performance gains than simply using a larger LLM, highlighting the importance of efficient retrieval methods in AI development 2. The research also emphasizes the risk of poorly aligned retriever components, which can degrade LLM performance relative to non-RAG versions 2.
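The scoring side of an exam-based benchmark is straightforward to sketch. The example below computes multiple-choice accuracy for one model under the three scenarios mentioned above. The answer key and model answers are invented, the names are hypothetical, and the paper's own analysis goes beyond plain accuracy, so treat this as a simplified illustration rather than the authors' method.

```python
# Invented answer key for a four-question multiple-choice exam.
exam_key = ["B", "A", "D", "C"]

# Invented answers from the same model under three retrieval settings.
answers = {
    "closed_book": ["B", "C", "D", "A"],  # no documents supplied
    "oracle": ["B", "A", "D", "C"],       # the ideal source document supplied
    "retrieval": ["B", "A", "A", "C"],    # documents chosen by a retriever
}


def accuracy(predicted, key):
    """Fraction of questions answered correctly."""
    if len(predicted) != len(key):
        raise ValueError("predicted answers and key must have the same length")
    return sum(p == k for p, k in zip(predicted, key)) / len(key)


scores = {setting: accuracy(given, exam_key) for setting, given in answers.items()}
print(scores)
```

Comparing the retrieval score against the closed-book and oracle scores shows how much of the available headroom a given retriever actually captures, and a retrieval score below closed-book would signal the kind of misaligned retriever the paper warns about.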